We had a legacy .NET web service that uses WSE 3.0. We are the service provider, and the setup also involves an intermediate authentication server and the consumer. We were getting intermittent, mysterious socket timeout exceptions.

A thorough network snoop was conducted across 4 network teams (we span 2 intranet and 2 WAN domains). Everything was healthy, with <10 ms latency between hops.

We were puzzled until, in a stroke of genius, we decided to check inputtrace.webinfo and outputtrace.webinfo (the default logging configuration). There was nothing special about them except their size: with archival scheduled only monthly, the files had grown past 600 MB. Some team members argued that the logging mechanism should be asynchronous and should have no influence on the SOAP response. Still, there was no harm in archiving and flushing the logs and re-testing. Sure enough, response times were back to under 0.5 s.

Our corrective action was to increase the frequency of the archival from monthly to weekly.
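As a rough illustration of the archival step, here is a minimal sketch that moves a trace file aside once it passes a size threshold. The class name, the archive folder and the 50 MB threshold are all hypothetical, not what our scheduled job actually uses, and it assumes the file is not locked by the running service at the time (File.Move throws an IOException otherwise).

```csharp
using System;
using System.IO;

public static class TraceArchiver
{
    // Moves the trace file into archiveDir when it is larger than maxBytes.
    // Returns the archived path, or null when nothing was moved.
    public static string ArchiveIfLarge(string tracePath, string archiveDir, long maxBytes)
    {
        var info = new FileInfo(tracePath);
        if (!info.Exists || info.Length <= maxBytes)
            return null;

        Directory.CreateDirectory(archiveDir);
        string stamp = DateTime.UtcNow.ToString("yyyyMMdd-HHmmss");
        string target = Path.Combine(archiveDir,
            Path.GetFileNameWithoutExtension(tracePath) + "-" + stamp + info.Extension);
        File.Move(tracePath, target);
        return target;
    }

    public static void Main()
    {
        // Hypothetical locations; adjust to wherever the service writes its traces.
        string[] traces = { "inputtrace.webinfo", "outputtrace.webinfo" };
        foreach (string trace in traces)
        {
            string moved = ArchiveIfLarge(trace, "trace-archive", 50L * 1024 * 1024);
            Console.WriteLine(moved == null ? trace + ": left in place" : trace + " -> " + moved);
        }
    }
}
```

Checking the size on every run means the job can be scheduled as often as you like without producing empty archives.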

Showing posts with label performance. Show all posts

Wednesday, July 28, 2010

Tuesday, April 20, 2010

Resizing array

If you ever have to work with an array whose size you won't know until run time, you have a few options:

- Clone the old array into a new array of the right size (unnecessary overhead)

- Just proceed with an array that has redundant trailing allocated memory

- Resize it with Array.Resize(ref <arrayName>, int newArraySize)

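int[] myArray = new int[50];

int newArrayCount = 0;

// some biz logic goes here; increment newArrayCount for every slot that is actually filled

// the required array size is now known

Array.Resize(ref myArray, newArrayCount);

You can also pass the count expression directly as the 2nd parameter of the resizing method instead of storing it in a variable first; it is up to your preference. Note that arrays expose Length rather than a Count property, and that Array.Resize itself allocates a new array of the requested size and copies the elements across.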
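To make the pattern concrete, here is a small self-contained sketch. The KeepEven helper and its filtering rule are made up for illustration; the point is only the over-allocate, count, then Array.Resize sequence.

```csharp
using System;

public static class ResizeDemo
{
    // Keeps only the even numbers from input, using an over-allocated buffer
    // that is trimmed with Array.Resize once the real count is known.
    public static int[] KeepEven(int[] input)
    {
        int[] buffer = new int[input.Length]; // worst case: every element is kept
        int count = 0;
        foreach (int value in input)
        {
            if (value % 2 == 0)
                buffer[count++] = value;
        }
        Array.Resize(ref buffer, count); // new array of 'count' elements, contents copied
        return buffer;
    }

    public static void Main()
    {
        int[] result = KeepEven(new[] { 1, 2, 3, 4, 5, 6 });
        Console.WriteLine(result.Length);            // prints 3
        Console.WriteLine(string.Join(",", result)); // prints 2,4,6
    }
}
```

If the collection keeps growing and shrinking, a List<int> is probably the simpler choice; Array.Resize fits best when the size is fixed once and for all after the logic has run.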

Tuesday, April 6, 2010

Business objects VS Typed Datasets

I always had the feeling that POCOs (plain old C# objects) would outperform typed datasets and datasets. The main reason people use datasets is that they reduce development time, though only by a very small amount in my opinion. Business objects are easier to understand, and in C# the compiler actually does a lot of the work for you.

Example in other languages:

public class MyClass

{

private String someString;

public String getString()

{

return this.someString;

}

public void setString(String value)

{

this.someString = value;

}

}

In C#, you can do this:

public class MyClass

{

public String someString { get; set; }

}

That only shows how little code it takes to write a business object class. Next, on to the real work.

I created a simple demo project in VS 2010 (beta); you can use any version of Visual Studio to compile it if you want to try it out yourself.

In my business object class:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

namespace DemoBizObject

{

public class BizObject

{

public int Id { get; set; }

public string Name { get; set; }

public string Desc { get; set; }

public decimal price { get; set; }

public DateTime CreatedDt { get; set; }

public DateTime ModifiedDt { get; set; }

}

}

In my aspx code-behind, I did this:

using System;

using System.Collections.Generic;

using System.Linq;

using System.Web;

using System.Web.UI;

using System.Web.UI.WebControls;

using System.Collections.ObjectModel;

using DemoBizObject;

using System.Diagnostics;

using System.Web.UI.DataVisualization.Charting;

namespace DemoWebApp

{

public partial class _Default : System.Web.UI.Page

{

Collection<BizObject> BizObjects = new Collection<BizObject>();

double[] pocotimings = new double[7];

double[] typeddstimings = new double[7];

decimal d = 0.00m;

const int ONE = 1;

const int TEN = 10;

const int ONEHUNDRED = 100;

const int ONETHOUSAND = 1000;

const int TENTHOUSAND = 10000;

const int ONEHUNDREDTHOUSAND = 100000;

const int ONEMILLION = 1000000;

const string LINEBREAK = "<br />";

protected void Page_Load(object sender, EventArgs e)

{

}

protected void Button1_Click(object sender, EventArgs e)

{

this.Label1.Text += "Typed Datasets" + LINEBREAK + LINEBREAK;

this.Label1.Text += ONE +": "+ demoTypedDS(ONE)+ LINEBREAK;

this.Label1.Text += TEN + ": " + demoTypedDS(TEN) + LINEBREAK;

this.Label1.Text += ONEHUNDRED + ": " + demoTypedDS(ONEHUNDRED) + LINEBREAK;

this.Label1.Text += ONETHOUSAND + ": " + demoTypedDS(ONETHOUSAND) + LINEBREAK;

this.Label1.Text += TENTHOUSAND + ": " + demoTypedDS(TENTHOUSAND) + LINEBREAK;

this.Label1.Text += ONEHUNDREDTHOUSAND + ": " + demoTypedDS(ONEHUNDREDTHOUSAND) + LINEBREAK;

this.Label1.Text += ONEMILLION + ": " + demoTypedDS(ONEMILLION) + LINEBREAK + LINEBREAK;

this.Label1.Text += "POCO" + ": " + LINEBREAK + LINEBREAK;

this.Label1.Text += ONE + ": " + demoPOCO(ONE) + LINEBREAK;

this.Label1.Text += TEN + ": " + demoPOCO(TEN) + LINEBREAK;

this.Label1.Text += ONEHUNDRED + ": " + demoPOCO(ONEHUNDRED) + LINEBREAK;

this.Label1.Text += ONETHOUSAND + ": " + demoPOCO(ONETHOUSAND) + LINEBREAK;

this.Label1.Text += TENTHOUSAND + ": " + demoPOCO(TENTHOUSAND) + LINEBREAK;

this.Label1.Text += ONEHUNDREDTHOUSAND + ": " + demoPOCO(ONEHUNDREDTHOUSAND) + LINEBREAK;

this.Label1.Text += ONEMILLION + ": " + demoPOCO(ONEMILLION) + LINEBREAK + LINEBREAK;

}

private string demoTypedDS(int numOfRecords)

{

typedDS ds = new typedDS();

Stopwatch watch = Stopwatch.StartNew();

for (int i = 0; i < numOfRecords; i++)

{

ds.DemoTable.AddDemoTableRow(i, "demo name", "demo desc", d + i, DateTime.Now, DateTime.Now);

}

watch.Stop();

return watch.Elapsed.TotalSeconds.ToString();

}

private string demoPOCO(int numOfRecords)

{

BizObject tempObject;

Stopwatch watch = Stopwatch.StartNew();

for (int i = 0; i < numOfRecords; i++)

{

tempObject = new BizObject();

tempObject.Id = i;

tempObject.Name = "demo name";

tempObject.price = d + i;

tempObject.CreatedDt = DateTime.Now;

tempObject.ModifiedDt = DateTime.Now;

tempObject.Desc = "demo desc";

BizObjects.Add(tempObject);

}

watch.Stop();

return watch.Elapsed.TotalSeconds.ToString();

}

}

}

Pardon my messy code; I didn't want to create this project initially, but I want to be someone who talks the talk and walks the walk. Next, create a similar table in your database with the following...

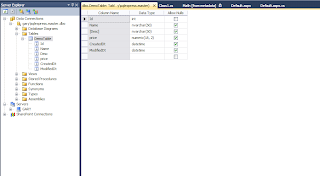

After that, right-click on your solution file, click "Add new item" and select a dataset object.

Double-click the XSD file, go to your DB, and drag and drop the table onto the XSD; the table will then appear there.

The code is simple and self-explanatory, with not much code reuse, especially in the printing part.

I ran the test and got the results for POCO vs. typed datasets.

Results (elapsed seconds for each record count):

Typed Datasets

1: 4.1E-05

10: 5.47E-05

100: 0.0004515

1000: 0.0045912

10000: 0.0747852

100000: 0.6806366

1000000: 7.4931112

POCO:

1: 5.8E-06

10: 9.6E-06

100: 8.26E-05

1000: 0.0008128

10000: 0.0081756

100000: 0.1015654

1000000: 1.1922586

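I tested it several times and dare to confirm that, with this demo project, POCO is faster than typed datasets across the whole range, from 1 record to a million. In the runs above the dataset took roughly 5 to 9 times as long as POCO at every size, which adds up to more than 6 seconds of difference once the record count reaches a million.

In this test I only did instantiation and assignment. The Big O notation for each approach: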

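- POCO = roughly O(n): each iteration does a constant amount of work, and the timings above grow about 10x for every 10x increase in records

- Dataset = also roughly O(n) in these measurements, not exponential; the difference from POCO is a larger constant factor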
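A quick way to sanity-check the growth rate is to feed the published timings back into a few lines of code. The sketch below uses only the numbers listed in the results; the class and method names are made up for illustration. For each record count it prints the dataset-to-POCO ratio and how much each timing grew compared with the previous (10x smaller) run.

```csharp
using System;

public static class TimingCheck
{
    // Record counts and elapsed seconds copied from the results listed above.
    public static readonly int[] Records = { 1, 10, 100, 1000, 10000, 100000, 1000000 };
    public static readonly double[] TypedDs = { 4.1E-05, 5.47E-05, 0.0004515, 0.0045912, 0.0747852, 0.6806366, 7.4931112 };
    public static readonly double[] Poco = { 5.8E-06, 9.6E-06, 8.26E-05, 0.0008128, 0.0081756, 0.1015654, 1.1922586 };

    // How many times slower the typed dataset was at the given index.
    public static double Ratio(int index)
    {
        return TypedDs[index] / Poco[index];
    }

    // How much the elapsed time grew when the record count went up tenfold.
    public static double Growth(double[] timings, int index)
    {
        return timings[index] / timings[index - 1];
    }

    public static void Main()
    {
        for (int i = 0; i < Records.Length; i++)
        {
            string growth = i == 0 ? "" :
                string.Format("  growth: DS x{0:F1}, POCO x{1:F1}", Growth(TypedDs, i), Growth(Poco, i));
            Console.WriteLine("{0,8}: DS/POCO = {1:F1}{2}", Records[i], Ratio(i), growth);
        }
    }
}
```

From 100 records upward, both columns grow by somewhere around 9x to 16x per tenfold increase, which is what near-linear behaviour looks like. The smallest sizes are dominated by fixed overhead, so their growth figures say little.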

Sunday, April 4, 2010

Performance bottleneck? review boxing & unboxing frequencies

There are times when our applications exceed the users' response time threshold (usually 7 s), within which every action must complete.

Page-by-page code reviews are the last resort, but when you do resort to them, look out for redundant boxing and unboxing of objects; check out: Technet

That's usually the culprit in my case, along with unnecessary object declarations and recursion.

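Iteration generally performs better than recursion. A plain for loop can also beat a foreach loop in some cases, but the boxing and unboxing overhead comes from storing value types in non-generic collections (such as ArrayList) or in object variables, not from foreach itself; over arrays and generic collections like List<T>, foreach does not box.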
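To show where boxing actually happens, here is a minimal sketch comparing a non-generic ArrayList with a generic List<int>. The class and method names are made up for illustration, and it deliberately contains no timings; wrap the two sum calls in a Stopwatch on your own machine if you want numbers.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

public static class BoxingDemo
{
    // Sums a non-generic ArrayList: every element was boxed on Add and is unboxed by the cast.
    public static long SumBoxed(ArrayList values)
    {
        long total = 0;
        foreach (object boxed in values)
            total += (int)boxed; // unboxing
        return total;
    }

    // Sums a generic List<int>: elements are stored as ints, so nothing is boxed.
    public static long SumGeneric(List<int> values)
    {
        long total = 0;
        foreach (int value in values)
            total += value;
        return total;
    }

    public static void Main()
    {
        var boxedList = new ArrayList();
        var genericList = new List<int>();
        for (int i = 0; i < 1000; i++)
        {
            boxedList.Add(i);   // boxes i into a heap object
            genericList.Add(i); // stores the int directly
        }

        object a = 42, b = 42; // two separate boxes for the same value
        Console.WriteLine(object.ReferenceEquals(a, b)); // prints False
        Console.WriteLine(SumBoxed(boxedList));          // prints 499500
        Console.WriteLine(SumGeneric(genericList));      // prints 499500
    }
}
```

When reviewing code, the things to search for are ArrayList, Hashtable, and value types passed through object parameters; swapping them for generic collections removes the boxing without changing the loops.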

Check the network traffic and the CPU and memory utilization for resource bottlenecks.

The last option is to review the entire architecture of the application (a PM's nightmare, as that means a lot of time and resources need to be pumped into the project). If a good architectural design had been laid down before development started, it wouldn't have come to this point :)
